Web Accessibility Evaluation of Massive Open Online Courses on Geographical Information Systems

Tania Calle-Jiménez, Sandra Sanchez-Gordon, Sergio Luján-Mora. Proceedings of IEEE Global Engineering Education Conference (EDUCON 2014), p. 680-686, Istanbul (Turkey), April 3-5 2014. ISBN: 978-1-4799-3190-3.

Abstract

This paper describes some of the challenges of making massive open online courses (MOOCs) on Geographical Information Systems (GIS) accessible. These courses are known by the generic name of Geo-MOOCs. A MOOC is an online course that is open to the general public for free, which leads to massive registration. A GIS is a computer application that acquires, manipulates, manages, models and visualizes geo-referenced data. The goal of a Geo-MOOC is to expand the culture of spatial thinking and the use of geographic information, enabling geospatial web technologies for widespread use. However, Geo-MOOCs, by nature, have inherent accessibility problems. The Convention on the Rights of Persons with Disabilities (CRPD), Article 24, recognizes the right of persons with disabilities to education: "States Parties must ensure that persons with disabilities are able to access general tertiary education, vocational training, adult education and lifelong learning without discrimination and on an equal basis with others". Therefore, it is important to have accessible Geo-MOOCs. In this paper, we present the results of the evaluation of a Geo-MOOC called “Maps and the Geospatial Revolution” using three tools available for free on the Internet: Chrome Developer Tools – Accessibility Audit, eXaminator and WAVE. The evaluation included a selection of web content and geographical data representative of the course. This provided feedback for establishing recommendations to improve the accessibility of the analyzed course. Other Geo-MOOCs can also benefit from these recommendations.

Keywords: Automatic Accessibility Evaluation Tools, Chrome Accessibility Audit, Geo-MOOC, Geographical Information Systems, Massive Open Online Courses, WAVE, Web Accessibility, eXaminator

More information: Web Accessibility Evaluation of Massive Open Online Courses on Geographical Information Systems

- I. INTRODUCTION

- II. FUNDAMENTALS

- III. STUDY CASE

- IV. EVALUATION METHOD

- V. EVALUATION RESULTS

- VI. CONCLUSIONS

- ACKNOWLEDGMENT

- REFERENCES

I. INTRODUCTION

This paper describes some of the challenges of making online courses on Geographical Information Systems (GIS) accessible. The results of an evaluation of an online course provided the basis for identifying these challenges.

Web accessibility means that persons with disabilities can use the web on an equal basis with others. Web accessibility is concerned not with the specific conditions of people but with the impact these conditions have on their ability to use the web [2].

There are several categories of disabilities: visual, motor, speech, cognitive, and psychosocial, among others [3]. Disabilities can be permanent, temporary or situational [4].

There are several strategies to improve the accessibility of a Massive Open Online Course (MOOC). One option is to avoid certain types of features and content that are not readily accessible to people with disabilities. This is not a good solution because it reduces the features offered to all users. Another alternative is to develop a generic version of the MOOC and several alternative versions for different categories of disabilities. This path is not suitable either, because the development, maintenance and support of multiple versions are expensive and in most cases not feasible. It also does not solve the problem of segregation. A better strategy is to identify the web accessibility problems of the MOOC's features and content, so that developers can solve them. In this research, we used three tools available for free on the Internet to detect accessibility barriers in the online course and establish recommendations to improve its accessibility.

In the literature review, we found several experiences of using automatic tools to evaluate web accessibility. For example, [5] uses eXaminator, AChecker, TAW, and HERA to evaluate educational institutions' websites; [6] uses Total Validator and Functional Accessibility Evaluator to evaluate government websites. Nonetheless, we did not find previous use of automatic web accessibility tools applied to Geo-MOOCs.

II. FUNDAMENTALS

A. Massive Open Online Courses

MOOCs have two defining characteristics that differentiate them from earlier online courses. First, a MOOC is open and free of charge, meaning that anyone with Internet access who is willing to learn can use it. Second, a MOOC is massive, meaning that a huge number of students can register for it [7]. Other than that, MOOCs do not differ much from other online courses: they offer a syllabus, a calendar, educational materials (mostly video lectures), learning activities, quizzes, and a forum for discussion with instructors and fellow learners. Geo-MOOC is the generic name given to MOOCs on GIS, which also include the manipulation of maps and geo-referenced content [8].

B. Geographical Information Systems

GIS are computer applications that acquire, manipulate, manage, model and visualize geo-referenced data for solving social, environmental, climatological, hydrological, planning, management and economic issues to support decision-making [9]. The importance of GIS lies in its ability to maintain and use data with exact locations on the Earth's surface, which has many applications in daily life: for example, drawing a map to tell friends where a party is, interpreting a subway map to decide which train to take, driving a car using a Global Positioning System (GPS), or just picking a holiday route.

GIS software stores, manipulates, manages and displays geo-referenced data. The term geo-referenced means that the data include geographic coordinates (latitude and longitude). Geographical data have two components: a spatial attribute, which is a location on Earth, and non-spatial attributes, which are descriptive characteristics. For example, the non-spatial attributes of a country are its name, customs, language, and currency, among others. The spatial attributes are geographical coordinates in some reference system, such as Universal Transverse Mercator (UTM).

There are two mechanisms to visualize and manage geographical data: raster and vector. Raster data are all kinds of images, such as satellite and aerial images or JPG and PNG files. Vector data are collections of points, lines and polygons presented as overlay layers; possible file formats for this data type are shapefile and KML.
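The distinction between spatial and non-spatial attributes, and between vector and raster data, can be illustrated with a minimal sketch. The class names `VectorFeature` and `RasterLayer`, their fields, and the sample values are assumptions made for illustration only; they do not correspond to any particular GIS package or file format.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

# (longitude, latitude) in decimal degrees; an illustrative convention
Coordinate = Tuple[float, float]


@dataclass
class VectorFeature:
    """A point, line or polygon plus its descriptive attributes."""
    geometry_type: str                 # "Point", "LineString" or "Polygon"
    coordinates: List[Coordinate]      # spatial attribute
    attributes: Dict[str, str] = field(default_factory=dict)  # non-spatial attributes


@dataclass
class RasterLayer:
    """A grid of cell values anchored at a geographic origin."""
    origin: Coordinate                 # coordinate of the upper-left corner
    cell_size: float                   # cell width and height, in degrees
    cells: List[List[int]]             # row-major grid of values

    def cell_at(self, lon: float, lat: float) -> int:
        """Return the value of the cell that contains (lon, lat)."""
        col = int((lon - self.origin[0]) / self.cell_size)
        row = int((self.origin[1] - lat) / self.cell_size)
        return self.cells[row][col]


# Hypothetical sample data
city = VectorFeature("Point", [(28.98, 41.01)], {"name": "Istanbul", "country": "Turkey"})
land_cover = RasterLayer(origin=(28.0, 42.0), cell_size=1.0, cells=[[1, 2], [3, 4]])
```

In this sketch the vector feature carries its coordinates explicitly, while the raster layer derives the location of each cell from the origin and the cell size. The lookup does no bounds checking, so coordinates outside the grid are not handled.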

C. Web Accessibility Automatic Evaluation Tools

Web accessibility means that persons with disabilities can perceive, understand, navigate, and interact with the web, and that they can contribute to the web on an equal basis with others. Web accessibility evaluation tools are software programs or online services that help determine if a website meets accessibility guidelines [10].

Automatic evaluation tools help developers make their web applications more accessible. These tools identify accessibility problems in the content and in the semantic and structural elements of a website. Automatic evaluation tools should support accessibility standards such as the Web Content Accessibility Guidelines (WCAG) 2.0 [11]. WCAG 2.0 includes principles and guidelines that help to make web pages more accessible for all users, especially for persons with disabilities. Automatic evaluation tools do not necessarily produce reliable results, since not all accessibility problems can be detected automatically. Besides, a tool can produce false positives that need to be discarded by human evaluation [12]. These tools are best exploited when used by accessibility experts. When developers lack expertise in accessibility, they tend to rely on the tool results [13].

Automatic evaluation tools are a useful resource to identify accessibility problems, but they cannot solve them. Developers have to solve them by making changes to the web pages based on the evaluation results.

III. STUDY CASE

At present, there are few Geo-MOOCs, perhaps due to the difficulty of their development. An example of a Geo-MOOC is the course “Maps and the Geospatial Revolution”, developed by Penn State University. The course was first offered in July 2013 and had 30,000 registered students. The second offering is currently open for enrollment and begins in April 2014 [14]. This shows a great interest in Geo-MOOCs.

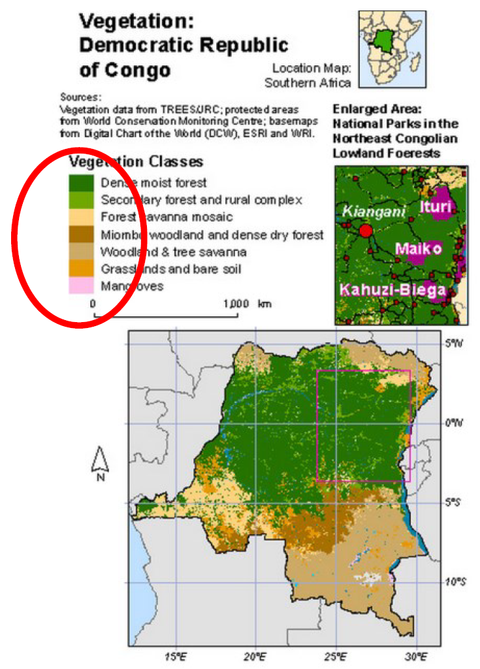

This Geo-MOOC presents some accessibility issues. As an example, Fig. 1 shows a vegetation map presented in Lesson 5 “Layout and Symbolization”. This map is difficult to interpret for users with color blindness because vegetation types are represented only by color. In this case, WCAG 2.0 recommends, in success criterion “1.4.1 Use of Color”, not using color as the only means of conveying information, in both maps and figures.

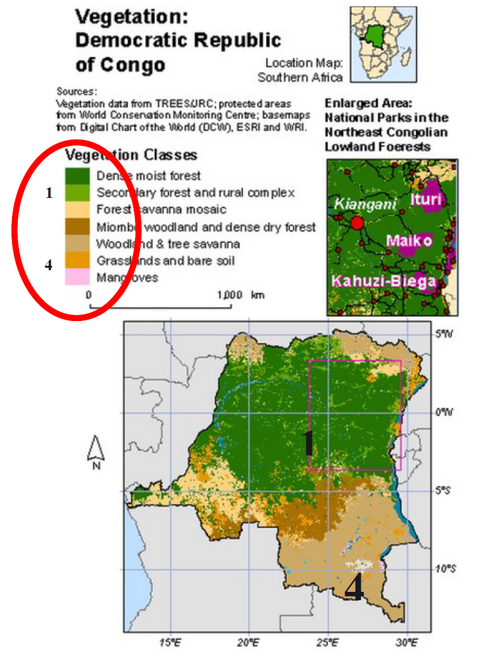

Fig. 2 shows one suggested solution to this problem: numeric codes can be added for each type of vegetation, both on the map and in the legend. The same solution can be applied to other types of multimedia content, such as images and graphs. In this paper, we have applied this approach to Figs. 7 to 10.
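The idea of pairing each color with a numeric code can be sketched as follows. This is only an illustration: the function names `build_legend` and `legend_label`, the vegetation types and the color values are hypothetical and are not taken from the course map.

```python
def build_legend(color_by_type):
    """Pair every vegetation type with a numeric code in addition to its color.

    Types are sorted by name so that the codes are stable across runs.
    """
    legend = []
    for code, (veg_type, color) in enumerate(sorted(color_by_type.items()), start=1):
        legend.append({"code": code, "type": veg_type, "color": color})
    return legend


def legend_label(entry):
    """Text printed both in the legend and on the matching map areas."""
    return f"{entry['code']}: {entry['type']}"


# Hypothetical vegetation types and colors
legend = build_legend({
    "Grassland": "#9ACD32",
    "Forest": "#228B22",
    "Wetland": "#2E8B57",
})
for entry in legend:
    print(legend_label(entry), entry["color"])
```

Because the numeric code appears on the map as well as in the legend, a reader who cannot distinguish the colors can still match each area to its vegetation type.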

As already stated, the Geo-MOOC used as study case in this research is the course “Maps and the Geospatial Revolution”. Nevertheless, other Geo-MOOCs can also benefit from these recommendations.

IV. EVALUATION METHOD

The evaluation method used includes three steps: tools selection, web content and geographical data selection, and tabulation and analysis of results.

A. Tools Selection

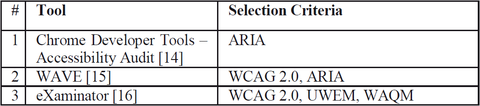

Table I shows the three tools selected for this study. The selection criteria were: availability (all of them are available for free on the Internet); methodology, such as the Unified Web Evaluation Methodology (UWEM) [15]; guidelines, such as WCAG 2.0 [11]; metrics, such as the Web Accessibility Quantitative Metric (WAQM) [16]; and technical specifications, such as Accessible Rich Internet Applications (ARIA) [17].

Chrome Developer Tools are a set of web authoring and debugging tools built into Google Chrome. The Accessibility Audit is an extension designed to check compliance with ARIA [18].

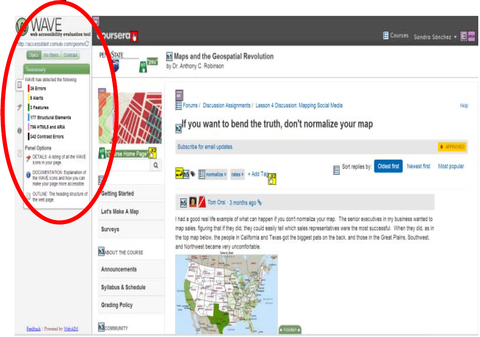

WAVE is an Application Program Interface (API) that allows automated and remote accessibility analysis of web pages using a processing engine. WAVE presents the analyzed web page with embedded icons and indicators. Each icon, box, and piece of information added by WAVE presents some information about the accessibility of the page. Red icons indicate accessibility errors. Green icons indicate accessibility features. WAVE uses WCAG 2.0 [19].

eXaminator is a service to evaluate the accessibility of a web page. It uses as reference WCAG 2.0 guidelines, techniques and failures. Each accessibility test provides a set of scores according to the impact for: blindness, limited vision, limited arms mobility, cognitive disabilities, and disabilities due to aging. These values are used to obtain an average global score [20].

When using automated tools, it is important to combine the results of more than one tool to provide complete results [12]. Moreover, we recommend a manual evaluation by an accessibility expert since even combined results are not enough.

B. Web Content and Geographical Data Selection

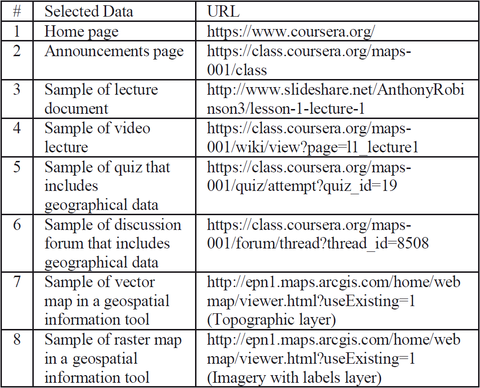

Web accessibility analysis was performed using a selection of web pages representative of the course “Maps and the Geospatial Revolution”, together with geographical data from within the course and from the tool used for the lab exercises, Esri's ArcGIS Online [21]. Table II shows the web content and geographical data selected. The accessibility of the home page is very important since it is the first contact users have with the website. If the home page is not accessible, it is very difficult or even impossible for users with disabilities to reach the other pages of the website. Therefore, it is essential to ensure the accessibility of the home page.

C. Results Tabulation and Analysis

We evaluated the selected data with each tool and prepared tables to summarize the results. Both the evaluation results and a preliminary analysis with findings are included in the following section.

V. EVALUATION RESULTS

A. Chrome Developer Tools - Accessibility Audit Results

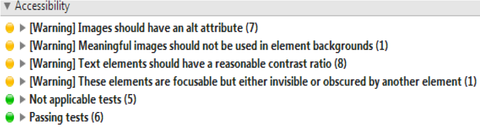

Fig. 3 and Fig. 4 show partial captures of Chrome Accessibility Audit Tool results for the Geo-MOOC's home page and raster map.

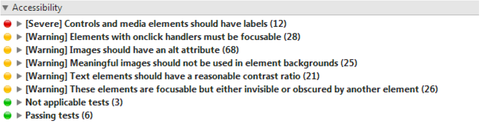

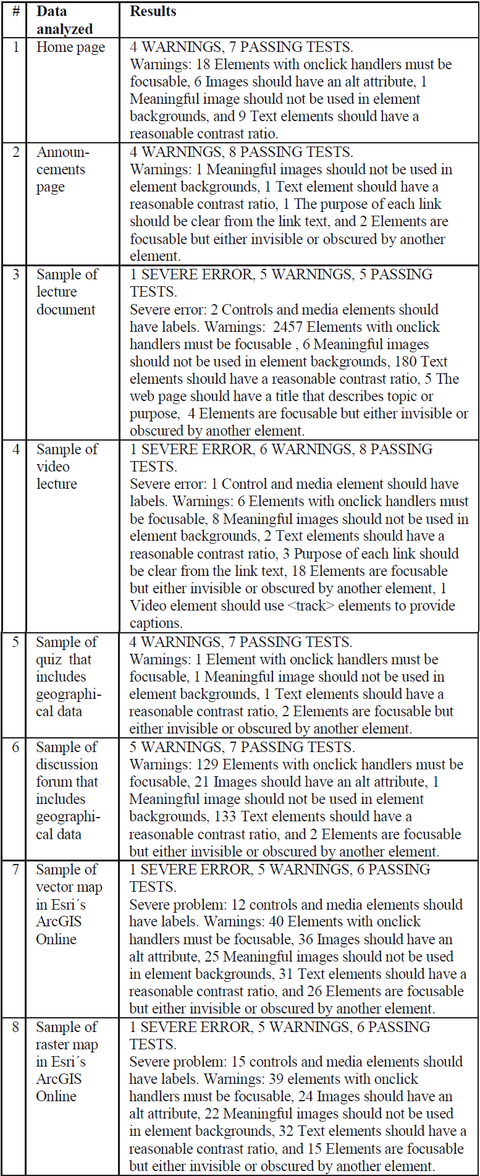

Table III summarizes the results obtained with Chrome Developer Tools – Accessibility Audit for the six types of data analyzed. The results include statistics of the number of rules violated (if any) as severe errors or warnings. It also states the number of passing tests. For each violation, it states how many elements on the page are involved.

Table III gives developers insight into how to improve the accessibility of the Geo-MOOC. For example, in result #4 "Sample of lecture video", the warning "1 Video element should use <track> elements to provide captions" draws attention to the HTML5 <track> element, which is placed within the <video> element to provide timed text tracks such as captions or subtitles in multiple languages.
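A check of this kind can be sketched with Python's standard `html.parser` module. This is not how the Chrome Accessibility Audit is implemented; the class `VideoCaptionChecker`, the function `count_uncaptioned_videos` and the sample markup are assumptions made for illustration.

```python
from html.parser import HTMLParser


class VideoCaptionChecker(HTMLParser):
    """Counts <video> elements that contain no caption or subtitle <track>."""

    def __init__(self):
        super().__init__()
        self.in_video = False
        self.has_track = False
        self.videos_without_captions = 0

    def handle_starttag(self, tag, attrs):
        if tag == "video":
            self.in_video = True
            self.has_track = False
        elif tag == "track" and self.in_video:
            # In HTML5 a <track> without a kind attribute defaults to subtitles
            kind = dict(attrs).get("kind", "subtitles")
            if kind in ("captions", "subtitles"):
                self.has_track = True

    def handle_endtag(self, tag):
        if tag == "video" and self.in_video:
            if not self.has_track:
                self.videos_without_captions += 1
            self.in_video = False


def count_uncaptioned_videos(html):
    checker = VideoCaptionChecker()
    checker.feed(html)
    checker.close()
    return checker.videos_without_captions


# Hypothetical markup: the first video has no captions, the second one does
sample = """
<video src="lecture1.mp4" controls></video>
<video src="lecture2.mp4" controls>
  <track kind="captions" src="lecture2.en.vtt" srclang="en" label="English">
</video>
"""
print(count_uncaptioned_videos(sample))
```

The sketch only verifies that a track is declared. Whether the caption file exists and matches the audio still has to be checked by a person.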

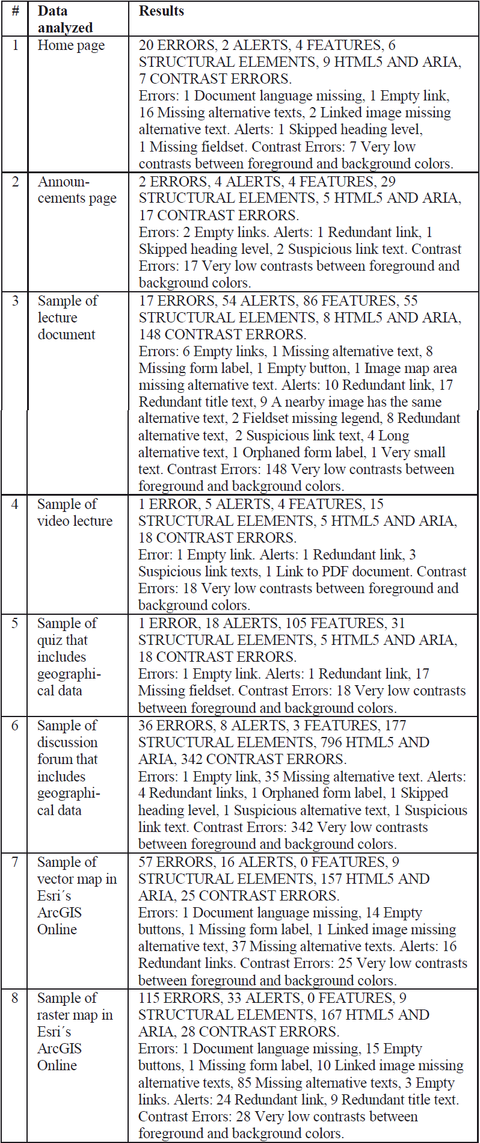

B. WAVE Results

Fig. 5 shows a partial capture of WAVE Tool results for the Geo-MOOC's discussion forum page. We found that WAVE cannot display the analysis of a raster map properly.

Table IV summarizes the results obtained with WAVE for all the data analyzed. The results include statistics of the number of rules violated (if any) as errors, alerts or contrast errors. It also states the number of features, structural elements and HTML5/ARIA tags used. For each violation, it states how many occurrences were detected.

Table IV gives developers additional insight into how to improve the accessibility of the Geo-MOOC. For example, the definition of the document language is missing in the home page, the sample vector map and the sample raster map. Without this information, assistive technology such as screen readers cannot work properly.
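The missing document language can be detected by looking for a non-empty `lang` attribute on the `<html>` element. The following is a minimal sketch using Python's standard `html.parser` module; it does not reproduce WAVE's own logic, and the names `LangChecker` and `document_language` are hypothetical.

```python
from html.parser import HTMLParser


class LangChecker(HTMLParser):
    """Records the lang attribute of the first <html> element, if any."""

    def __init__(self):
        super().__init__()
        self.lang = None

    def handle_starttag(self, tag, attrs):
        if tag == "html" and self.lang is None:
            value = dict(attrs).get("lang")
            if value and value.strip():
                self.lang = value.strip()


def document_language(html):
    """Return the declared document language, or None when it is missing."""
    checker = LangChecker()
    checker.feed(html)
    checker.close()
    return checker.lang


print(document_language('<html lang="en"><body>Maps</body></html>'))
print(document_language('<html><body>Maps</body></html>'))
```

A return value of `None` corresponds to the error described above: the page does not tell assistive technology which language it is written in.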

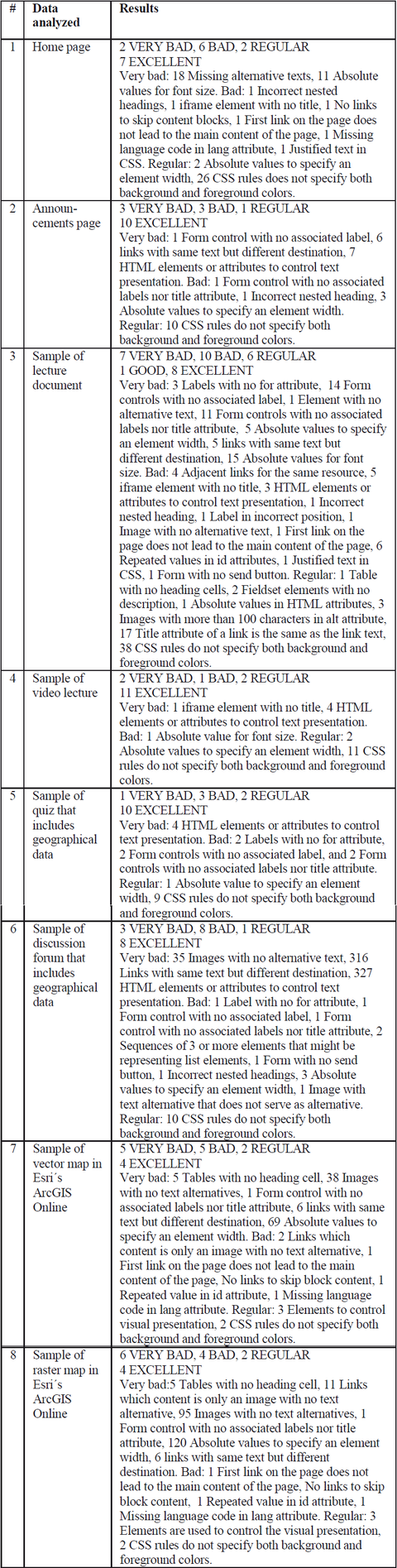

C. eXaminator Results

Fig. 6 shows a partial capture of eXaminator results for a sample vector map. This tool provides scores for five different types of disabilities: blindness, limited vision, limited arms mobility, cognitive disabilities, and disabilities due to aging. The eXaminator user interface is in Spanish.

Table V summarizes the results obtained with eXaminator for all the data analyzed. The results include statistics of the number of rules violated (if any) as very bad, bad, and regular. It also states the number of excellent and good features found. For each violation, it states how many occurrences were detected.

Table V also gives developers insight into how to improve the accessibility of the Geo-MOOC. For example, the home page, announcements page, sample video lecture and sample discussion forum have incorrectly nested headings. This prevents assistive technology, such as screen readers, from knowing the correct order in which the content should be presented to users.
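One common form of incorrect nesting is a heading that skips a level, for example an `<h3>` directly after an `<h1>`. The sketch below flags such jumps with Python's standard `html.parser` module. It is an assumption about what a heading check might look like, not a description of eXaminator's tests, and the names `HeadingCollector` and `skipped_heading_levels` are hypothetical.

```python
import re
from html.parser import HTMLParser


class HeadingCollector(HTMLParser):
    """Collects the levels of h1..h6 elements in document order."""

    def __init__(self):
        super().__init__()
        self.levels = []

    def handle_starttag(self, tag, attrs):
        if re.fullmatch(r"h[1-6]", tag):
            self.levels.append(int(tag[1]))


def skipped_heading_levels(html):
    """Return (previous_level, level) pairs where a heading level is skipped.

    The level before the first heading is treated as 0, so a page that
    starts with an <h2> is reported as (0, 2).
    """
    collector = HeadingCollector()
    collector.feed(html)
    collector.close()
    problems = []
    previous = 0
    for level in collector.levels:
        if level > previous + 1:
            problems.append((previous, level))
        previous = level
    return problems


print(skipped_heading_levels("<h1>Course</h1><h3>Lesson 5</h3>"))
```

Moving back up the hierarchy, for example from `<h3>` to `<h1>`, is not reported, since closing several sections at once is a valid structure.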

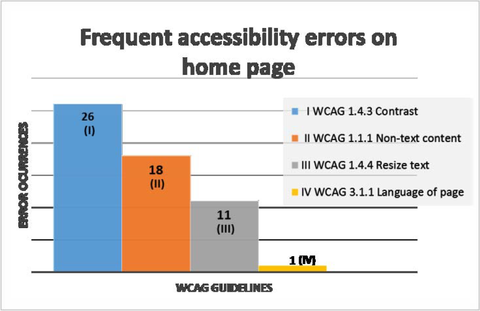

D. Preliminary Analysis and Findings

In this section, we present the most frequent accessibility errors in the pages and maps evaluated, as well as some findings.

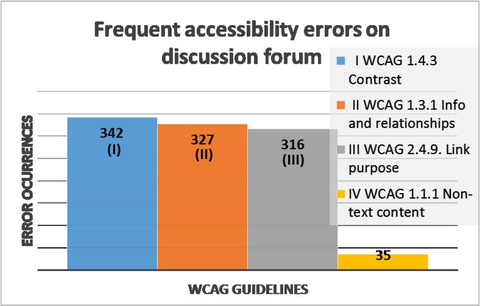

Fig. 7 and Fig. 8 illustrate frequent accessibility errors found in the home page and discussion forum of the Geo-MOOC when using the automatic tools. For each error found, we present the associated WCAG 2.0 Guideline and the number of occurrences.

The most frequent error in web pages is the lack of adequate contrast between background and foreground colors (WCAG 1.4.3). This affects users with vision impairment.
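WCAG 2.0 defines the contrast ratio as (L1 + 0.05) / (L2 + 0.05), where L1 and L2 are the relative luminances of the lighter and the darker color, and criterion 1.4.3 asks for at least 4.5:1 for normal text and 3:1 for large text. The following sketch computes that ratio for two hexadecimal colors. The function names and the sample colors are illustrative, and the code assumes six-digit `#RRGGBB` values.

```python
def _linearize(channel):
    """Convert an 8-bit sRGB channel to its linear value (WCAG 2.0 formula)."""
    c = channel / 255.0
    return c / 12.92 if c <= 0.03928 else ((c + 0.055) / 1.055) ** 2.4


def relative_luminance(hex_color):
    """Relative luminance of a '#RRGGBB' color."""
    hex_color = hex_color.lstrip("#")
    r, g, b = (int(hex_color[i:i + 2], 16) for i in (0, 2, 4))
    return 0.2126 * _linearize(r) + 0.7152 * _linearize(g) + 0.0722 * _linearize(b)


def contrast_ratio(foreground, background):
    """Contrast ratio between two colors, from 1 (none) to 21 (black on white)."""
    l1 = relative_luminance(foreground)
    l2 = relative_luminance(background)
    lighter, darker = max(l1, l2), min(l1, l2)
    return (lighter + 0.05) / (darker + 0.05)


def passes_1_4_3(foreground, background, large_text=False):
    """True when the pair meets the WCAG 2.0 level AA minimum contrast."""
    threshold = 3.0 if large_text else 4.5
    return contrast_ratio(foreground, background) >= threshold


# Hypothetical color pairs
print(round(contrast_ratio("#000000", "#FFFFFF"), 2))
print(passes_1_4_3("#AAAAAA", "#FFFFFF"))
```

Light gray text on a white background, as in the second call, is a typical case that fails the criterion even though it may look acceptable to users without vision impairment.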

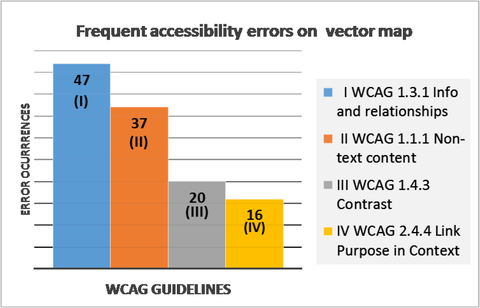

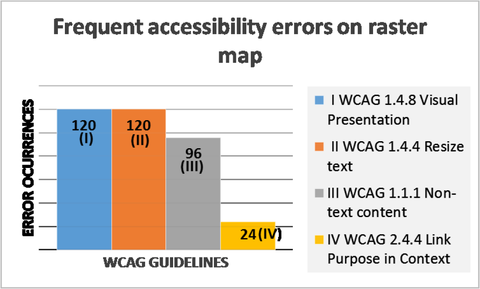

Fig. 9 and Fig. 10 illustrate frequent accessibility errors found in the vector and raster maps of the Geo-MOOC. For each error found, we present the associated WCAG 2.0 Guideline and the number of occurrences.

The most frequent error in maps concerns the resizing capability (WCAG 1.4.4). This affects users with vision impairment.

Finally, the most frequent ARIA accessibility errors found were: images should have an alt attribute, text elements should have a reasonable contrast ratio, meaningful images should not be used in element backgrounds, and elements are focusable but either invisible or obscured by another element.
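The first of these errors, images without an `alt` attribute, is straightforward to detect automatically. The sketch below uses Python's standard `html.parser` module; the names `MissingAltChecker` and `images_missing_alt` and the sample markup are hypothetical, and the logic is an assumption rather than the rule used by any of the three tools.

```python
from html.parser import HTMLParser


class MissingAltChecker(HTMLParser):
    """Collects the src of every <img> that has no alt attribute at all."""

    def __init__(self):
        super().__init__()
        self.missing = []

    def handle_starttag(self, tag, attrs):
        if tag == "img":
            attributes = dict(attrs)
            # An empty alt="" is allowed: it marks an image as decorative
            if "alt" not in attributes:
                self.missing.append(attributes.get("src", ""))


def images_missing_alt(html):
    checker = MissingAltChecker()
    checker.feed(html)
    checker.close()
    return checker.missing


# Hypothetical markup
sample_page = (
    '<img src="vegetation-map.png">'
    '<img src="logo.png" alt="Course logo">'
    '<img src="divider.png" alt="">'
)
print(images_missing_alt(sample_page))
```

An automatic check like this can only confirm that the attribute is present. Whether the text actually describes the map or figure is a question for manual evaluation.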

VI. CONCLUSIONS

The results reported by the three tools differ. For example, consider the home page's lack of alternative text for images. For this particular error, eXaminator found 18 occurrences, WAVE 16, and Chrome only 6. WAVE identified 2 additional occurrences under the associated error “Linked image missing alternative text”.
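The arithmetic behind this comparison can be made explicit with a short sketch. The counts are the ones quoted above; the dictionary layout and the function `summarize` are illustrative assumptions.

```python
# Occurrences of missing alternative text on the home page, as reported above
reported = {
    "eXaminator": 18,
    "WAVE": 16,
    "Chrome Accessibility Audit": 6,
}
# WAVE lists linked images without alternative text as a separate error
wave_linked_images = 2


def summarize(counts):
    """Return the tool with most findings, the one with fewest, and the gap."""
    highest = max(counts, key=counts.get)
    lowest = min(counts, key=counts.get)
    return highest, lowest, counts[highest] - counts[lowest]


combined = dict(reported)
combined["WAVE"] = reported["WAVE"] + wave_linked_images

print(summarize(reported))
print(combined["WAVE"] == combined["eXaminator"])
```

Once WAVE's two related occurrences are added, its total matches the 18 reported by eXaminator, while the gap to Chrome remains 12 occurrences.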

Chrome Developer Tools – Accessibility Audit checks accessibility issues based on 12 rules: five from ARIA, two related to text, one related to images, two related to focus, one related to color, and one related to video. Chrome was the only tool that detected the error “Video element should use <track> elements to provide captions” in the Geo-MOOC's sample video lecture page.

WAVE’s strong feature is the inclusion of detailed types of errors related to the alternative text of links and images.

In general, eXaminator reports more accessibility errors than the other tools. The reason might be that eXaminator checks not only WCAG 2.0 guidelines but also techniques and failures.

There are errors that none of the tools detected. For example, in one of the Geo-MOOC’s vegetation maps, vegetation types were represented only by color. This is an accessibility issue for users with color blindness.

Future work includes additional accessibility testing of Geo-MOOCs performed by users with different types of disabilities to complement manual results by experts and automated results by tools.

ACKNOWLEDGMENT

This work has been partially supported by the Prometeo Project by SENESCYT, Ecuadorian Government.

REFERENCES

[1] United Nations, “Convention on the Rights of Persons with Disabilities and Optional Protocol”, pp. 16-18, 2008.

[2] J. Carter and M. Marker, “Web accessibility for people with disabilities: an introduction for Web developers”, IEEE Transactions on Professional Communication, vol. 44 (4), pp. 225-233, 2001.

[3] S. Burgstahler, “Real connections: Making distance learning accessible to everyone”, 2002. Available online: http://www3.cac.washington.edu/doit/Brochures/PDF/distance.learn.pdf

[4] G. Farrelly, “Practitioner barriers to diffusion and implementation of web accessibility”, Technology and Disability, vol. 23 (4), pp. 223-232, 2011.

[5] H. Amado-Salvatierra, B. Linares, I. García, L. Sánchez, and L. Rios, “Analysis of Web Accessibility and Affordable Web Design for partner institutions of ESVI-AL Project”, Application of Advanced Information and Communication Technology, pp. 54-61, 2012.

[6] M. Bakhsh and A. Mehmood, “Web Accessibility for Disabled: A Case Study of Government Websites in Pakistan”, Frontiers of Information Technology, pp. 342-347, 2012.

[7] J. Kay, P. Riemann, E. Diebold and B. Kummerfeld, “MOOCs: So Many Learners, So Much Potential”, IEEE Intelligent Systems, vol. 28 (3), pp. 70-77, 2013.

[8] A. Schutzberg, “Building the World’s First Geo-MOOC: An Interview with Penn State’s Anthony Robinson”, 2013. Available online: http://www.directionsmag.com/podcasts/building-the-worlds-first-geomooc-an-interview-with-penn-states-antho/311753

[9] J. Wooseob, “Spatial perception of blind people by auditory maps on a tablet PC”, Proceedings of the American Society for Information Science and Technology, vol. 44 (1), pp 1–8, 2008.

[10] W3C, “Introduction to Web Accessibility”, 2012. Available online: http://www.w3.org/WAI/intro/accessibility.php.

[11] W3C, “Web Content Accessibility Guidelines (WCAG) 2.0.”, 2008. Available online: http://www.w3.org/TR/WCAG20/.

[12] M. Vigo, J. Brown, and V. Conway, “Benchmarking web accessibility evaluation tools: measuring the harm of sole reliance on automated tests”, Proceedings of the 10th International Cross-Disciplinary Conference on Web Accessibility, 2013.

[13] K. Groves, “Choosing an Automated Accessibility Testing Tool: 13 Questions you should ask”, 2013. Available online: http://www.karlgroves.com/2013/06/28/choosing-an-automatedaccessibility-testing-tool-13-questions-you-should-ask/

[14] Coursera, “Maps and the Geospatial Revolution”, 2014. Available online: https://class.coursera.org/maps-001/class

[15] E. Velleman, and C. Strobbeet, “Unified Web Evaluation Methodology (UWEM)”. Technical Report WAB Cluster, 2006.

[16] M. Vigo, M. Arrue, G. Brajnik, R. Lomuscio, and J. Abascal, “Quantitative metrics for measuring web accessibility”, Proceedings of International Cross-disciplinary Conference on Web Accessibility, pp 99-107, 2007.

[17] W3C, “WAI-ARIA Overview”, 2014. Available online: http://www.w3.org/WAI/intro/aria

[18] Google, “Accessibility Developer Tools”, 2012. Available online: https://chrome.google.com/webstore/detail/accessibility-developert/fpkknkljclfencbdbgkenhalefipecmb?hl=en

[19] WebAIM, “Web Accessibility evaluation Tool”, 2012. Available online: http://wave.webaim.org

[20] C. Benavidez, “Automatic Evaluation of Accessibility”, 2014. Available online: http://examinator.ws/

[21] ESRI, “ArcGIS Online”, 2014. Available online: http://www.arcgis.com/home/
